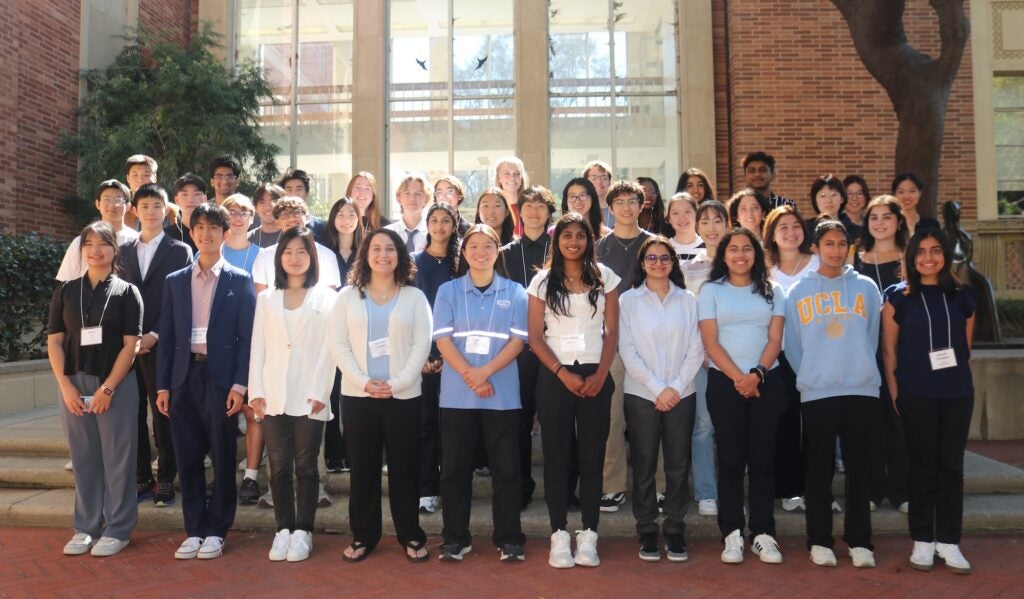

Forty-six undergraduate students across 14 majors participated in the inaugural hackathon.

The rapid adoption of Artificial Intelligence (AI) across higher education has transformed learning. Instructors are reconsidering how they deliver instruction, and students are reconsidering how they support their own educational development in this new environment. On a local level, insights from the recent 2025 UCLA Senior Survey reveal UCLA students’ varied experiences using AI for learning. The data also highlight that many students have begun to think critically about how to use AI in their education and are seeking further guidance on how to evaluate these tools.

“Students are at the forefront of AI in education,” explained Susan Swarts, Administrative Director of UCLA DataX, a strategic initiative exploring data science’s intersections with justice and society. “They experience the benefits and the pitfalls of the current technology in their lives. They have valuable knowledge and can provide examples and immediate suggestions about the impact of AI on their education.”

Forty-six undergraduate students across 14 majors had an opportunity to consider how policy might guide AI’s development during DataX’s inaugural policy hackathon. Co-Designing Care: A Policy Hackathon for Technology and the Public Good brought together interdisciplinary student groups for two days of designing policy proposals across categories such as Discrimination in Automated Systems, Automation in Education, and Agentic AI and Mental Health. The groups created actionable frameworks addressing how data and technology might shape society based on the shared values of care, accountability, and working toward the public good.

Developing a Cognitive Integrity Framework

The hackathon’s Automation in Education category prompted teams to examine how AI is transforming aspects of education such as assessment, instructional tools, and personal learning outcomes for students across K-12 and higher education. Groups surveyed the state of educational guidance around the technology and developed evidence-based frameworks to ensure the technology’s future use ultimately elevates student learning. Angela Fentiman, director of communications and creative services for the Teaching and Learning Center (TLC) and one of the category’s judges, noted that student participants were committed to preserving their intellectual development when using AI for learning.

“Students recognized the need for a thoughtful approach and collective guiding principles as we strive to better understand the relationship between AI and higher education,” Fentiman said. “The hackathon participants were grappling with how they would acquire needed skills from their educational experience while also learning to use new technology effectively and ethically.”

The category’s winning policy proposal came from the team Ethical Technology & Analytics (ETA) Lab, which included fourth-year cognitive science major Elena Yu, first-year statistics and data science major Ted Zhang, and first-year math and computation major Alan Dai. Their policy brief advocated for a Cognitive Integrity Framework aimed at ensuring AI learning systems strengthen student agency and higher-order thinking.

In addressing AI’s role across education, ETA Lab’s proposal pointed to a lack of unified education policy regulating how these systems impact students’ critical thinking skills. The team warned that, without direction, learners may become more adept at using AI tools but struggle to generate original thoughts or make critical decisions.

“As students, we’re living the transition in real time, watching AI go from a helpful tool to something that is quietly restructuring how we think, learn, and do academic work,” the ETA Lab team members shared. “We’ve seen classmates use AI to accelerate learning, but also to bypass it.”

The team believes detailed educational policy standards that create technological transparency, enhanced learner protections, and better AI literacy will ensure that these tools enhance problem-solving skills rather than serve merely as answer generators.

“We hope policymakers recognize that AI in education is not just a technology issue – it’s a cognitive one,” ETA Lab explained. “If we design it as a thinking partner and cultivate the users’ correct mindset of AI in education, we can expand human intelligence instead of substituting for it.”

At the campus level, the team hopes that instructors will play a key role in demonstrating to students how to think for themselves alongside AI tools.

“AI delivers answers – instructors cultivate thinkers,” ETA Lab members emphasized. “They are the ones who spark intellectual curiosity, model scholarly values, and guide students in developing the habits of mind that last far beyond any technology. AI does not replace the educator; it elevates the importance of their role.”

As for the participants, Swarts believes that the hackathon offered students an opportunity to analyze AI’s future impact while also making key connections to continue this work on campus.

“I hope they met new people and expanded their network,” she noted. “I hope the students now have a deeper understanding of the relationship between technology and society and that they also know they have agency in shaping that relationship for the public good. I also hope students know that DataX is a resource for them and will continue to engage with us.”